What I learnt

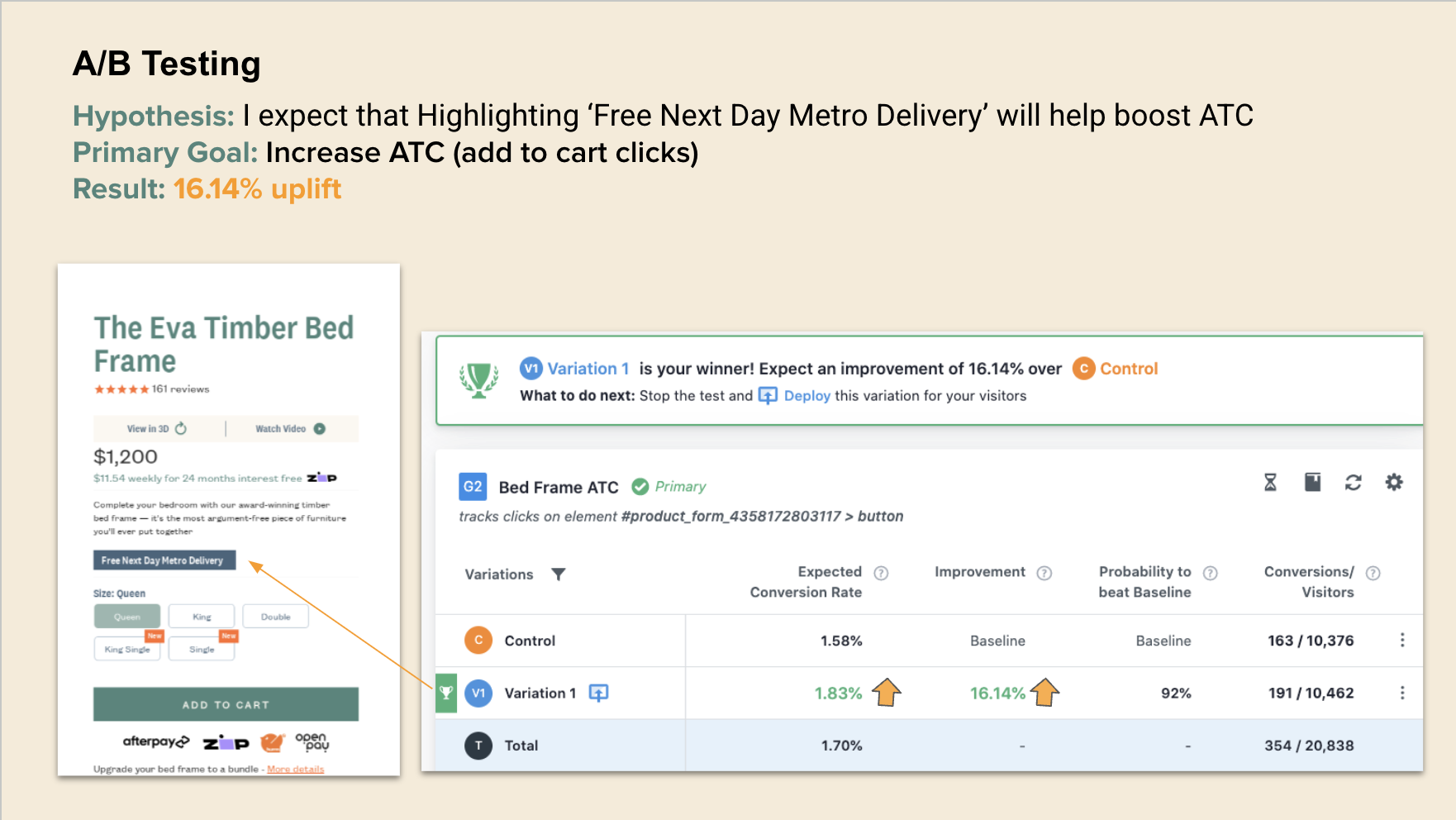

Not all tests will lead to a winning result. If I were to put a rough ratio on wins versus losses, I'd say roughly one in four tests was a winner. The misconception I often faced was that a losing test was wasted effort. People with a limited understanding of A/B testing commonly assumed a failed test was bad. What I learnt, and eventually began to teach, was that the activity of testing in and of itself, regardless of wins and losses, is always good.

Why is it always good? To put it simply, A/B testing not only provides the opportunity to learn and grow, but also prevents costly mistakes.

It is better to test a new idea than to launch it and assume it's having a positive impact. If you can't measure it, you're essentially shooting at targets in the dark. The occasional wins were always great and looked impressive, but I learnt that they should not overshadow the tests that weren't successful, as these were equally important to sustaining and protecting Eva's growth.

In the first six months of our CRO strategy, we began to encounter tests that needed more time to reach a statistically significant result. (We set our significance level at 95%. Loosely, this means that if there were truly no difference between control and variation, a gap as large as the one we observed would appear through random variation less than 5% of the time.) This meant running tests that took up to two months, some of which ultimately ended up being inconclusive.
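To illustrate why some tests dragged on for months, here is a minimal sketch of the standard sample-size approximation for a two-sided, two-proportion z-test at the 95% level mentioned above. The 80% statistical power, the 3% baseline conversion rate and the lift values are assumptions chosen for illustration, not Eva's figures, and `sample_size_per_variant` is a hypothetical helper.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate users needed in EACH arm of a two-sided two-proportion z-test.

    Assumption: power=0.80 is a common default, not a figure from the article.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% significance
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical 3% baseline conversion rate
    for lift in (0.20, 0.05):
        n = sample_size_per_variant(0.03, lift)
        print(f"{lift:.0%} relative lift -> {n:,} users per variant")
```

Because the required sample grows roughly with the inverse square of the difference being detected, shrinking the expected lift from 20% to 5% pushes the users needed per arm up by more than tenfold. On a site with modest traffic, that is how a test on a subtle change can stretch into weeks or months.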

The problem we faced was that seeking a statistically significant result on every test drastically slowed down our testing frequency, and we were forced to wait long periods before running new ones. To combat this, I implemented a strategy based on 'probability over a period of time': if the probability of success was above roughly 80% over a two-week period, with a solid number of test users accumulated, we were comfortable rolling the variation out.

The thinking was that if a test was having a generally positive impact and showing no significant drops, then we should feel confident rolling it out. Of course, the aim was always to strive for 'Stat Sig' results, but ultimately we wanted to run tests, and run them frequently, so this approach helped us move efficiently and at speed.
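The 'probability over a period of time' rule can be sketched roughly as below. The article doesn't say which tool produced the probability, so this swaps in one common approach: a Beta-Binomial model with uniform priors, with the probability that the variation beats the control estimated by Monte Carlo sampling. The function names, the 5,000-users-per-arm floor (standing in for "a solid number of test users") and the example counts are all hypothetical; only the 80% threshold and the two-week minimum come from the text.

```python
import random


def prob_variant_beats_control(conv_a, users_a, conv_b, users_b, draws=20_000, seed=42):
    """Monte Carlo estimate of P(rate_B > rate_A) under uniform Beta(1, 1) priors."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    wins = 0
    for _ in range(draws):
        # Posterior for each arm: Beta(conversions + 1, non-conversions + 1)
        rate_a = rng.betavariate(conv_a + 1, users_a - conv_a + 1)
        rate_b = rng.betavariate(conv_b + 1, users_b - conv_b + 1)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


def ready_to_roll_out(conv_a, users_a, conv_b, users_b, days_running,
                      min_days=14, min_users_per_arm=5_000, threshold=0.80):
    """Hypothetical decision rule: enough time, enough users, probability >= threshold."""
    if days_running < min_days:
        return False  # not yet a full two-week window
    if min(users_a, users_b) < min_users_per_arm:
        return False  # too few users in at least one arm
    return prob_variant_beats_control(conv_a, users_a, conv_b, users_b) >= threshold


if __name__ == "__main__":
    # Hypothetical counts: control 150/5,000 vs variation 190/5,000 after 14 days
    p = prob_variant_beats_control(150, 5_000, 190, 5_000)
    print(f"P(variation beats control) = {p:.3f}")
    print("Roll out?", ready_to_roll_out(150, 5_000, 190, 5_000, days_running=14))
```

Requiring a minimum duration and sample size alongside the probability threshold helps guard against rolling out a variation on the strength of a lucky first few days.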

We kept it lean at Eva, and from a CRO perspective this helped us move at lightning speed. It was me on the CRO strategy, analysis and design, along with an absolute gun of a developer who helped implement new features and tests. I acknowledge there are pros and cons to this approach, but my experience was that fewer cooks in the kitchen, combined with trust and autonomy, led to focus and quicker results!

In terms of taking everyone on the journey, I provided monthly Slack updates on our notable test results. They were quick and dirty, but they did the job: they gave everyone in marketing, support and product development a snapshot of what we were achieving, and they added to the culture of testing and experimentation.